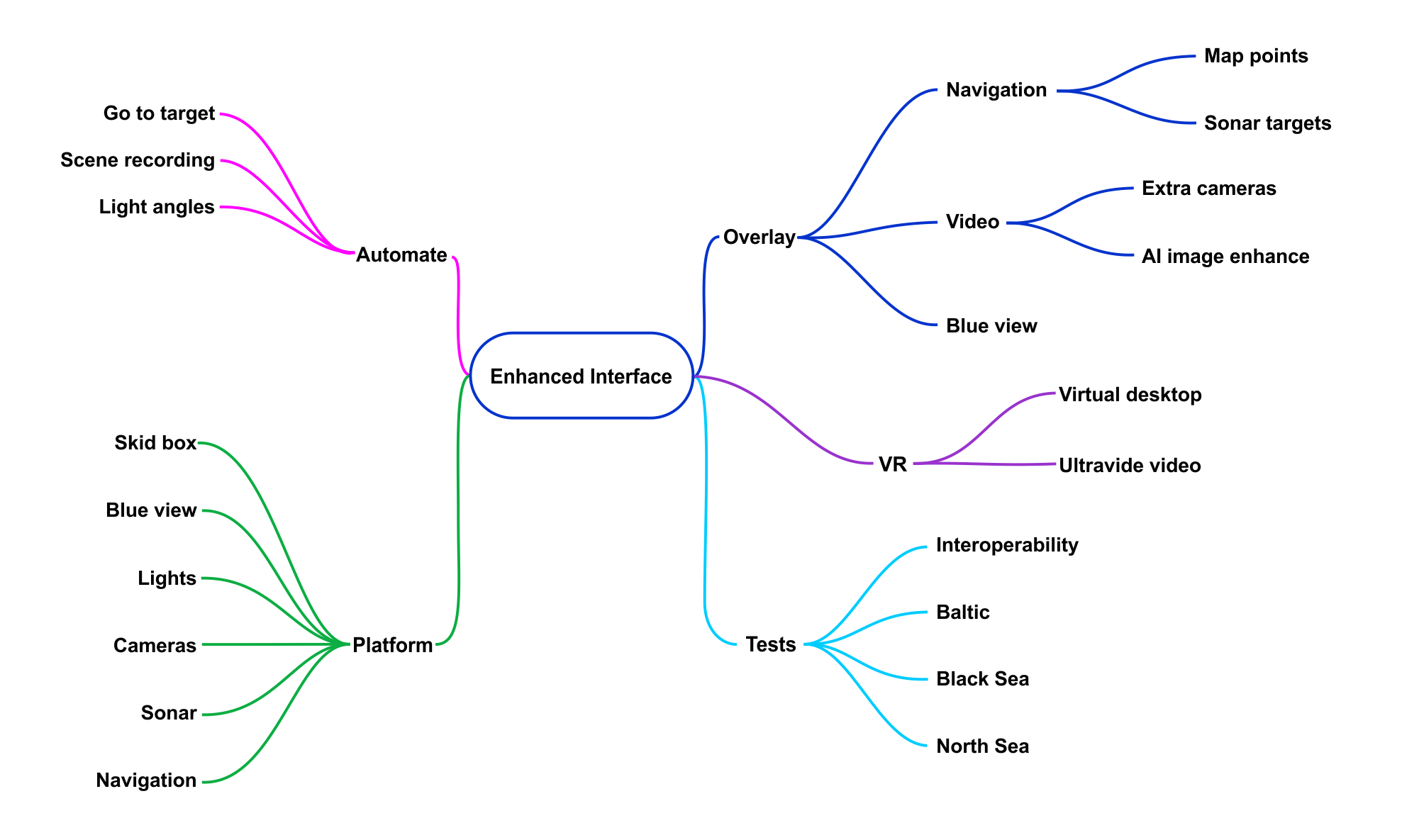

Workplan

WP1 Multisensory input for ROV

The first aim is to develop a hardware platform that provides the pilot with a variety of acoustic and optical data, as well as non-coaxial lighting.

Task 1.1 Interface for data streaming from ROV. To conserve the bandwidth of the existing umbilical cable, an underwater hub will be developed to collect data from the sensors and stream it over Ethernet, via digital modems, using a single pair of wires in the umbilical. A deck unit able to receive the signals will be built using existing technology and will forward the data to the data processing center onboard the vessel.

Task 1.2 Development of non-coaxial lighting. An underwater lighting system will be developed that the ROV can position around the investigated object, providing a light source that is not in line with the camera. The lights will be battery-powered and lightweight, and the ROV will be able to deploy and retrieve them using commands transmitted by the pilot.

Task 1.3 Physical integration of sensors on ROV. The developed sensors, the deployable lighting system and the underwater hub for signal processing will be integrated either directly on the ROV or on a separate frame attached to the robot (skid-box). The design will be optimized for minimum draught, and to minimize both hindrance to navigation and the number of connections to the ROV.

Task 1.4 ROV Interoperability. The sensor hardware platform will be prepared to operate with multiple ROV models. Technical solutions will be developed to fit the interfaces and mechanical connections of both the Saab Seaeye Falcon owned by IOPAN and the Vectorr M5 ROV owned by GEOECOMAR, leading to more universal solutions for multiple makes and models of ROVs.

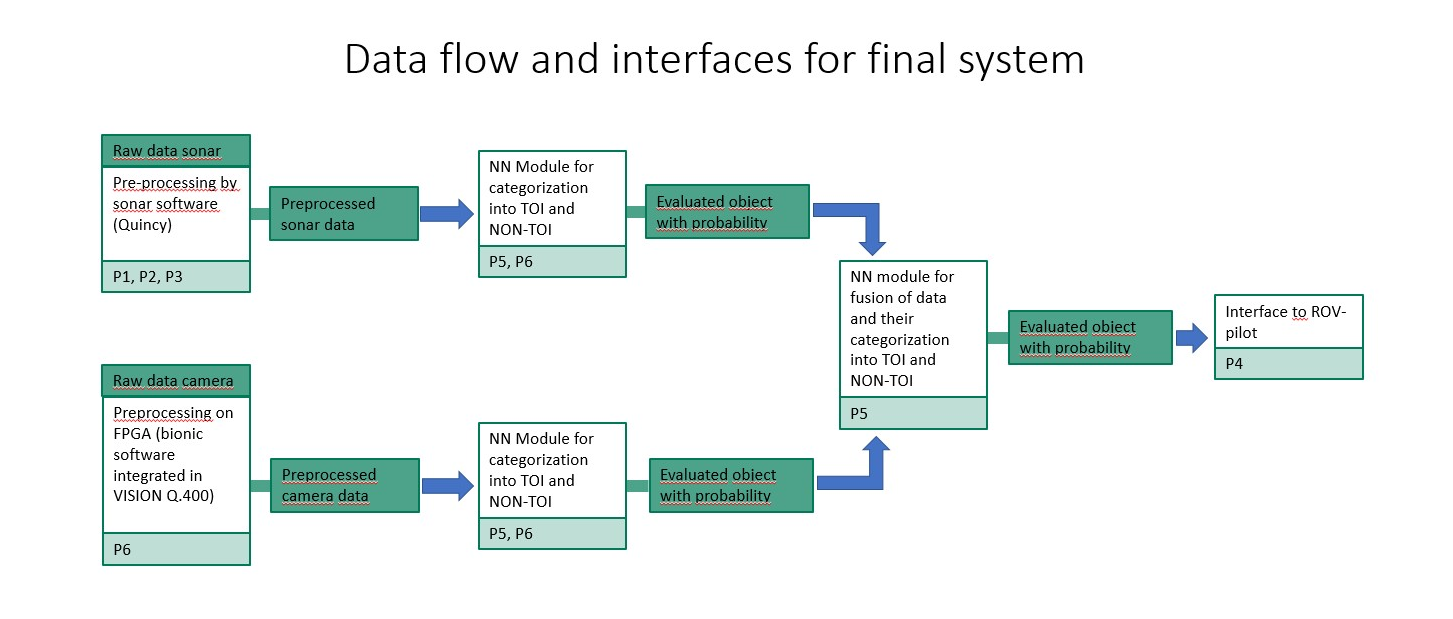

WP2 Data processing and integration

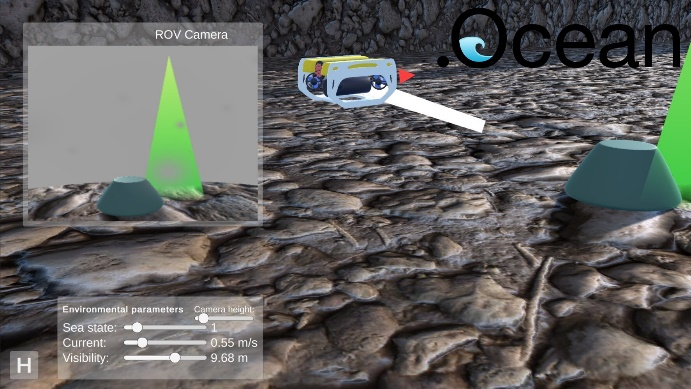

Multisensory data collected by the ROV has to be processed to deliver a complex, integrated image to the ROV pilot, helping the pilot locate and identify munitions on the seafloor. This will be achieved by online processing of optical imagery and by integrating as much other sensor data as possible into a single image that the pilot can use effectively.

Task 2.2 Augmented reality overlay. A software interface will be built to create a head-up display (HUD) overlay that combines the camera view with a display of digital objects for the pilot.

Task 2.3 Navigation overlay. To eliminate the need for a separate navigation screen, the position of the robot relative to the vessel, obtained via USBL, will be translated to absolute geopositioning using QUINCY software. Positions of investigated objects will also be transferred to QUINCY to create a map that can be used in search patterns. The data will then be sent to the HUD overlay in the form of heading and distance.
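The geometry behind this task can be illustrated with a minimal sketch. It is not the QUINCY implementation; it assumes that the USBL offset has already been rotated into north/east metres using the vessel heading, that ranges are short enough for a local flat-earth approximation on a spherical Earth, and that the function names (`rov_absolute_position`, `heading_and_distance`) are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical approximation (assumption)


def rov_absolute_position(vessel_lat, vessel_lon, north_m, east_m):
    """Shift the vessel's GNSS fix (degrees) by a USBL offset in metres north/east."""
    dlat = math.degrees(north_m / EARTH_RADIUS_M)
    # A degree of longitude shrinks with the cosine of the latitude.
    dlon = math.degrees(
        east_m / (EARTH_RADIUS_M * math.cos(math.radians(vessel_lat)))
    )
    return vessel_lat + dlat, vessel_lon + dlon


def heading_and_distance(lat1, lon1, lat2, lon2):
    """Return (bearing in degrees clockwise from north, distance in metres)
    from point 1 to point 2, using a local flat-earth approximation."""
    mean_lat = math.radians((lat1 + lat2) / 2.0)
    north_m = math.radians(lat2 - lat1) * EARTH_RADIUS_M
    east_m = math.radians(lon2 - lon1) * EARTH_RADIUS_M * math.cos(mean_lat)
    bearing = math.degrees(math.atan2(east_m, north_m)) % 360.0
    return bearing, math.hypot(north_m, east_m)
```

In such a scheme the ROV position would be computed first, and `heading_and_distance` would then be called with the ROV position and a stored object position to obtain the two values shown on the HUD. Over longer ranges a proper geodetic library would be a safer choice than this approximation.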

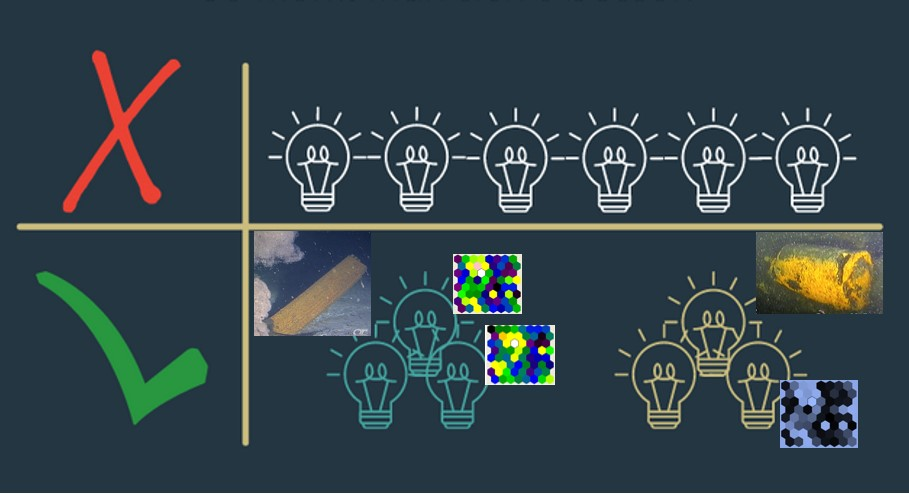

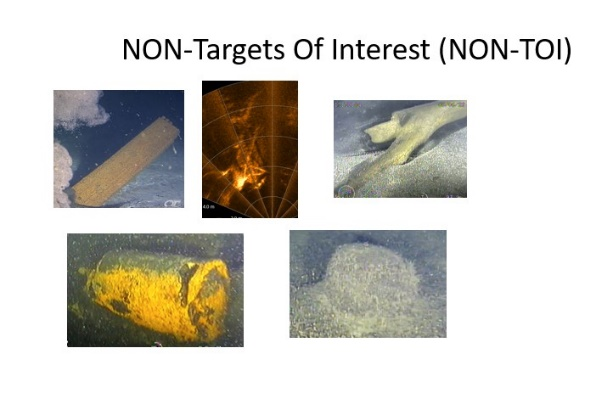

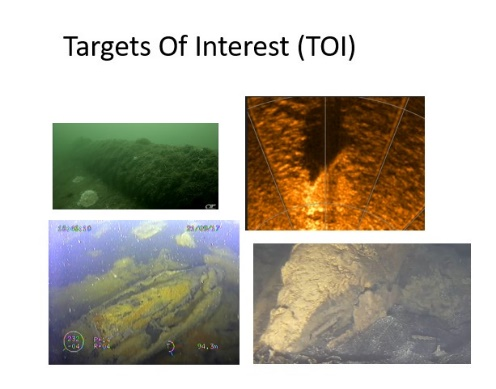

Task 2.4 Data processing by neural nets. Data from multiple sensors will be analyzed for Targets of Interest (TOI) and non-TOI. The results will be used to train neural networks for target detection.

Task 2.6 Interoperability of data processing. The data overlay technologies will be prepared to accept data streams from sensors that are not part of the hardware platform, e.g. other types of USBL navigation, other cameras or sonars. This will be tested on two ROV systems, owned by IOPAN and GEOECOMAR.

WP3 Virtual reality interface

A virtual reality interface will be constructed for the ROV pilot, enabling the pilot to track either integrated imagery or multiple sensors on virtual screens. The virtual dimensions of the screen will be much larger than those of the physical displays mounted in the operation center, providing the greater detail the ROV pilot needs to operate in the sensitive environments of munition dumpsites.

Task 3.1 Construct VR interface for ROV pilot. Virtual desktops and ultra-wide screen. All sensor data will be displayed either on multiple virtual screens, enabling the pilot to observe navigation, sonar and video data in one virtual environment, or magnified to enable detailed observation.

Task 3.2 Hands-free control system for VR. To enable the ROV pilot to control the virtual environment without additional manipulators or controllers, head and eye tracking mechanisms will be deployed. This will allow the pilot to keep controlling the ROV through the standard interface while controlling the virtual environment at the same time.

WP4 Task driven autonomous ROV control

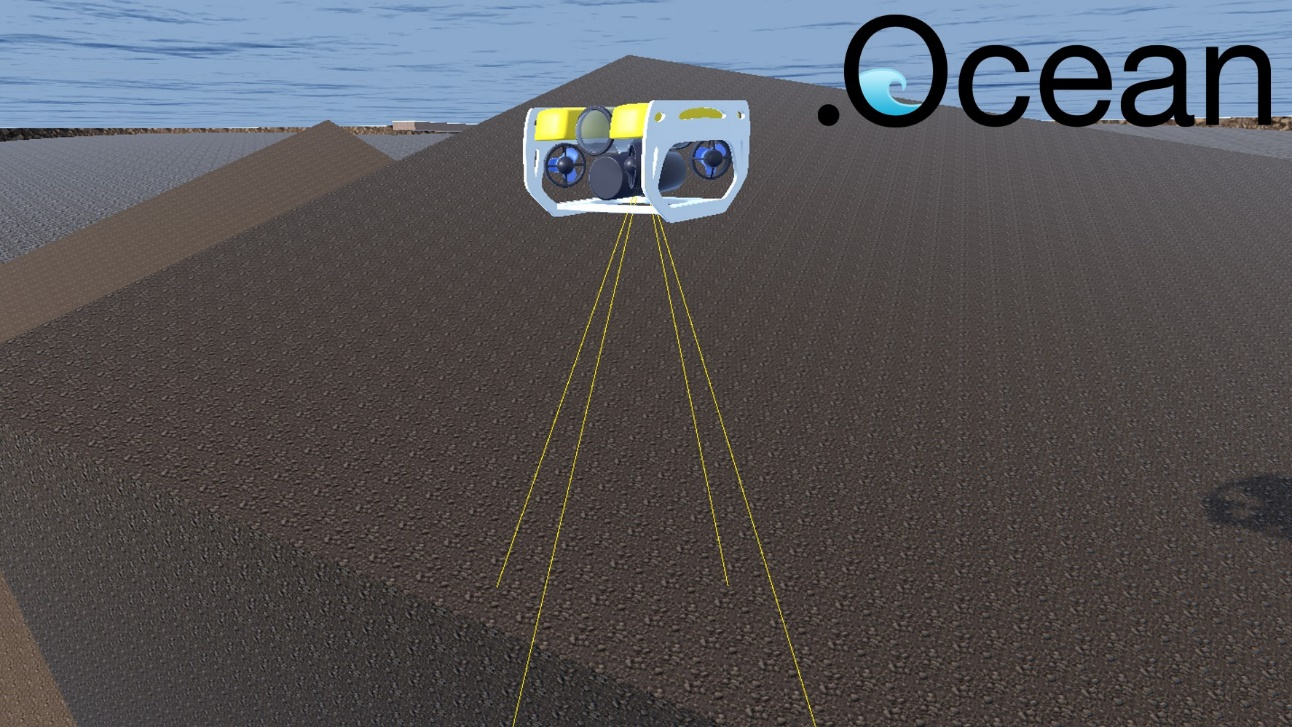

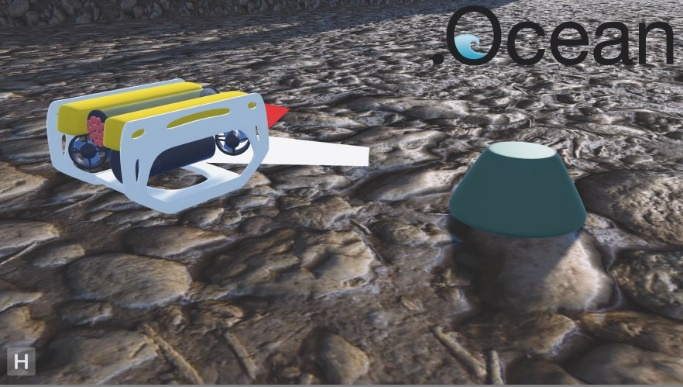

Development of a task-driven ROV controller based on a grey-box hybrid control model. The tasks for improved ROV operations are hovering, angle control, waypoint and line planning, grid navigation and dynamic positioning in a 3D environment with obstructions.

Task 4.2 Simulator interface of the ROV. A generic driver will be developed for the ROV thrusters and for interfacing the onboard sensors and positioning. A scalable control module will be developed.

Task 4.3 Task-driven hybrid control algorithms. Development of a grey-box ROV control model starting from a dynamic ROV model. The control algorithm will implement a list of predefined tasks such as hovering and line planning. This task will mainly be a conversion of the dotOcean AYB toolbox for underwater control. The model is hybrid and can learn from ROV operator instructions and human control actions. The model also includes external conditions such as currents in the training loop.
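To illustrate what "grey box" means in general terms, the sketch below starts from a known physical structure and identifies one unknown parameter from logged data. It is not the dotOcean AYB toolbox or the project's model. It assumes a deliberately simplified one-axis dynamic model, m·a = u − c·v, with known mass m, thrust u, velocity v, acceleration a and an unknown linear drag coefficient c; the function name `fit_linear_drag` is hypothetical.

```python
def fit_linear_drag(mass_kg, thrusts_n, velocities_ms, accelerations_ms2):
    """Least-squares estimate of c in  m*a = u - c*v  from logged samples.

    The model structure (the "white" part) is fixed by physics; only the
    drag coefficient (the "black" part) is learned from data.
    """
    num = 0.0
    den = 0.0
    for u, v, a in zip(thrusts_n, velocities_ms, accelerations_ms2):
        drag_force = u - mass_kg * a  # by the model, this should equal c*v
        num += v * drag_force
        den += v * v
    if den == 0.0:
        raise ValueError("all velocities are zero; drag is not identifiable")
    return num / den
```

A real ROV model would have six degrees of freedom, nonlinear drag and current terms, but the principle is the same: logged operator runs supply the samples, and the fit adjusts only the parameters that physics leaves open.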

Task 4.4 ROV simulator for training the model. Starting from a 3D underwater world based on metric localization and acoustic mapping, a task-driven ROV simulator environment will be developed.

Task 4.5 Testing the model through simulation. Specific autonomous tasks will be developed to enable targeted data collection for the detection and characterisation of seabed or buried objects. The ROV model will be trained through simulation and annotation of ROV operator actions.

Task 4.6 Transferability from simulator to the real world. A stepwise evaluation of how well the simulator results transfer to the real world, including whether all aspects important for control stability are covered in the simulator.
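One simple way to quantify such transfer, offered here only as an illustration and not as the project's evaluation protocol, is the root-mean-square error between a simulated and a measured trajectory for the same task. The sketch assumes both trajectories are sampled at the same time instants along one axis (for example depth in metres); `trajectory_rmse` is a hypothetical helper.

```python
import math


def trajectory_rmse(simulated, measured):
    """Root-mean-square difference between two equally sampled trajectories."""
    if len(simulated) != len(measured) or not simulated:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_error = sum((s - m) ** 2 for s, m in zip(simulated, measured))
    return math.sqrt(squared_error / len(simulated))
```

A low value on a hovering run and a high value on a line-following run would, for instance, suggest that the simulator captures station-keeping dynamics better than behaviour under way.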

WP5 Tests and calibration

This WP aims to test the developed system in the lab as well as in a wide range of environments.

Task 5.1 Basin tests. Systems developed in WP2, WP3 and WP4 will be tested and evaluated in lab conditions. Performance will be adjusted as needed.

Task 5.2 Field tests. Tests will be run in several campaigns at sea, at different depths and in different environmental conditions, to address the challenges imposed by varying visibility, depth, and type of investigated object. The test sites include the Baltic Sea and the Black Sea, as well as the harbour site in the North Sea.